Alibaba's Qwen and OpenAI face new competition as DeepSeek launches a new AI model

By: Sandhya

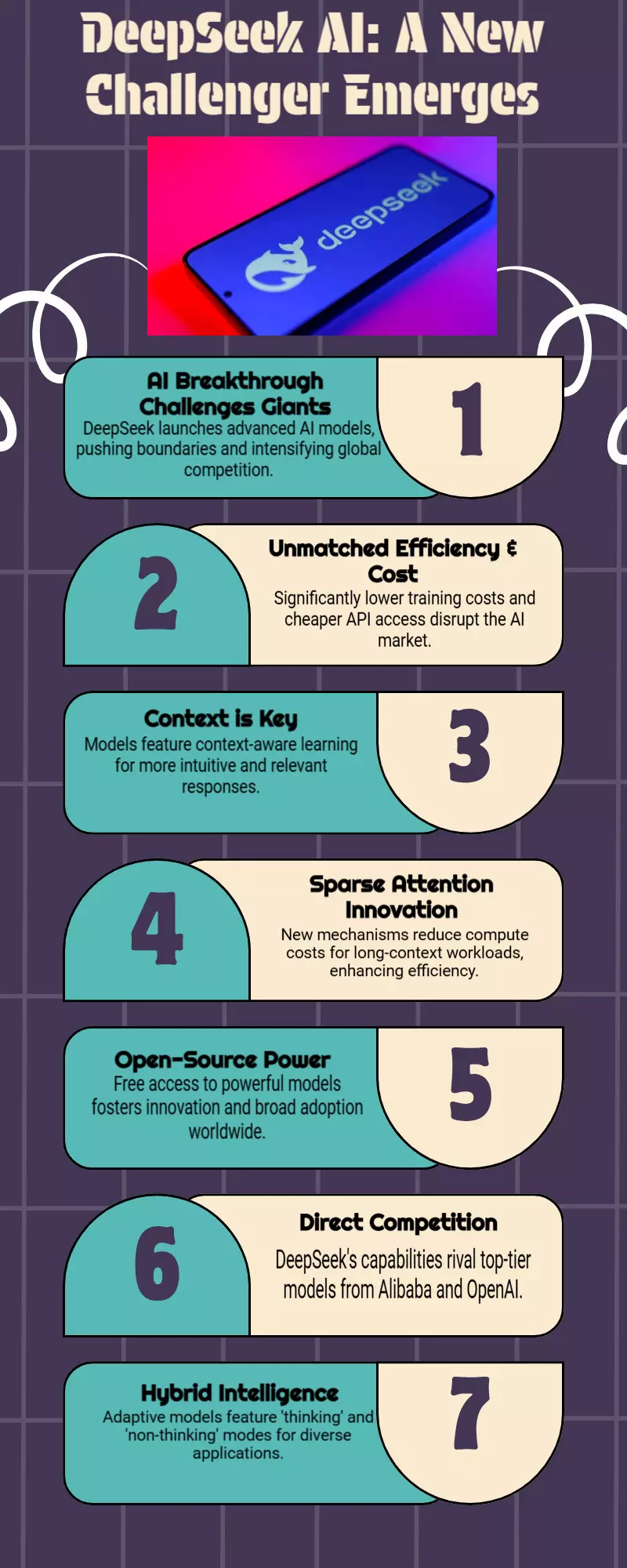

Chinese AI firm DeepSeek has unveiled its latest "experimental" AI model, which it says processes long text sequences more effectively and is easier to train than earlier versions of its LLMs. In a post on the developer community Hugging Face, the Hangzhou-based company described DeepSeek-V3.2-Exp as an "intermediate step toward our next-generation architecture."

That next-generation architecture is likely to be DeepSeek's most significant product release since V3 and R1 startled Silicon Valley and international tech investors. The company says the V3.2-Exp model includes a mechanism called DeepSeek Sparse Attention, which it claims can reduce computing costs and improve the performance of certain types of models. In a post on X on Monday, DeepSeek said it was cutting its API prices by "50%."

The next-generation architecture is unlikely to rattle markets as much as earlier DeepSeek releases did in January. Even so, if it can match the performance of DeepSeek R1 and V3, it could put tremendous pressure on domestic competitors such as Alibaba's Qwen and on US rivals such as OpenAI.